Welcome to the club if you are still behind the artificial intelligence curve. This is the last chapter of my AI series, and I hope it has shed a humble light on the linchpin of the Fourth Industrial Revolution (4IR). Links to the previous installments are included below; you do not want to miss the mini-documentary in Part 3. Keep the following quotes in mind as I prognosticate on near-term AI jobs.

“I have all the tools and gadgets. I tell my son, who is a producer: you never work for the machine; the machine works for you.” – Quincy Jones

“Nobody phrases it this way, but I think that artificial intelligence is almost a humanities discipline. It’s really an attempt to understand human intelligence and human cognition.” – Sebastian Thrun

“A year spent in artificial intelligence is enough to make one believe in God.” – Alan Perlis

- Part 1: Apr. 13 – Artificial Intelligence is Coming for Your Clothes, Boots, Harley, and Jobs

- Part 2: Apr. 25 – Artificial Intelligence is Coming for Your Brain

- Part 3: May 25 – AI: The Greatest Job Disruptor in History Now – Mini-Doc by the CrushTheStreet Team

- Part 4: May 31 – Artificial Intelligence – “End of the Job” May Not be What You Think – Learn a Trade

AI technology is extremely young. The future could overflow with incredible achievements if AI is used for good, or bring destruction if it is controlled by zealots. Do not underestimate the threat to individual privacy and liberty, which may grow if we go Borg and plug our bodies into the matrix with microchips. If you are in secondary school and considering a career in technology, early in your college education, or young enough to train into the industry, you are responsible for our future. Please handle it wisely. The specific field of study is up to you, as is ferreting out job titles and skill-set requirements.

We still do not know much about the jobs the AI economy will make—or take… “If you want to determine the true impact the AI revolution will have on the US economy, well, you may have to wait a bit. That was, essentially, the message from experts speaking today at MIT Technology Review’s EmTech Next conference in Cambridge, Massachusetts, where they discussed the future of work and the changes—expected and as yet unknown—that artificial intelligence, robotics, and other emerging technologies will bring to the US job market. It is hard to say whether AI will bring about a kind of technological shakeup different from ones we have seen in the past. It looks broadly similar, but we still do not know much about what an AI-based economy will look like, including how much companies will have to invest in things like buildings and equipment, and what kind of labor will be in demand. Coal mining was different from auto manufacturing, different from retailing, and an AI-based economy will be different from the other.” – MIT Technology Review, Jun. 2018

What we do know is that AI, much like a child, requires years of education and training. Here are some areas where employment opportunities should be plentiful, especially over the next decade or so as data foundations are developed.

Natural language generation (NLG) produces human-readable language as output from data input. What is new is the creation of interfaces that engage users in conversation. Alexa and Siri, cloud-based voice services available on millions of devices, are recent developments that rely on NLG.
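To make "data in, language out" concrete, here is a minimal sketch of template-based NLG, the simplest form of the technique. It is not how Alexa or Siri work internally; the function `describe_stock_move`, its thresholds, and the price figures are hypothetical and chosen only for illustration.

```python
def describe_stock_move(ticker: str, open_price: float, close_price: float) -> str:
    """Turn one hypothetical price record into an English sentence (template-based NLG)."""
    pct = (close_price - open_price) / open_price * 100
    # Content selection: treat moves under 0.05% as "flat" (arbitrary illustrative cutoff).
    if abs(pct) < 0.05:
        return f"{ticker} was essentially flat, closing at ${close_price:.2f}."
    # Lexical choice: the data decides the verb and whether to add emphasis.
    verb = "rose" if pct > 0 else "fell"
    emphasis = "a sharp " if abs(pct) >= 5 else ""
    return f"{ticker} {verb} {emphasis}{abs(pct):.1f}% to close at ${close_price:.2f}."


# Usage with made-up numbers:
print(describe_stock_move("ACME", 50.00, 53.00))
print(describe_stock_move("ACME", 50.00, 49.00))
```

The two calls print "ACME rose a sharp 6.0% to close at $53.00." and "ACME fell 2.0% to close at $49.00." Commercial NLG systems layer far more linguistic knowledge on top, but the core idea of letting the numbers pick the words is the same.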

Bots That Can Talk Will Help Us Get More Value from Analytics… “Conversations with systems that have access to data about our world will allow us to understand the status of our jobs, our businesses, our health, our homes, our families, our devices, and our neighborhoods — all through the power of NLG. It will be the difference between getting a report and having a conversation. The information is the same but the interaction will be more natural.” – Harvard Business Review, Nov. 2016

Here is Why Natural Language Processing is the Future of Business Intelligence… “Every time you ask Siri for directions, a complex chain of cutting-edge code is activated. It allows ‘her’ to understand your question, find the information you are looking for, and respond to you in a language that you understand. This has only become possible in the last few years. Until now, we have been interacting with computers in a way that they understand, rather than us. We have learnt their language. But now they’re learning ours.” – SISENSE, Apr. 2018

Speech recognition is the ability to identify words and phrases in spoken language and convert them into a machine-readable format or pass them to natural language technology such as NLG. We already use it in interactive voice response systems and mobile applications. The next generation of software will be able to handle natural speech patterns instead of requiring rudimentary, clearly spoken language.

A Complete Guide to Speech Recognition Technology… “Think about how a child learns a language. From day one, they hear words all around them. Parents speak to their child, and, although the child does not respond, they absorb all kinds of verbal cues such as intonation, inflection, and pronunciation. Their brain forms patterns and connections based on how their parents use language. Though it may seem humans are hardwired to listen and understand, we have actually been training our entire lives to develop this so-called natural ability. Speech recognition technology works in essentially the same way. Whereas humans have refined our process, we are still figuring out the best practices for computers. We have to train them in the same way our parents and teachers trained us. And that training involves a lot of innovative thinking, manpower, and research.” – Globalme, Mar. 2018

Virtual agents (or assistants) are common in customer service and support, and as smart home managers. The technology is evolving from basic chatbots into advanced assistants that interact seamlessly with humans. Combining natural language processing with other AI techniques makes it possible for a virtual assistant to draw on large volumes of knowledge and solve many more problems. Alexa and Siri are examples of how the basic chatbot is evolving into an interactive application.
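For contrast with today's assistants, a "basic chatbot" can be as simple as keyword-overlap intent matching with a hand-off to a human when nothing matches. This is a minimal sketch; the intents, keywords, and replies are hypothetical, and products such as Alexa, Siri, or Watson Assistant rely on trained language models rather than keyword sets.

```python
import re

# Hypothetical intents: each maps to a set of trigger keywords and a canned reply.
INTENTS = {
    "store_hours": ({"open", "hours", "close", "closing"},
                    "We are open 9am to 5pm, Monday to Friday."),
    "order_status": ({"order", "shipped", "tracking", "delivery"},
                     "Please share your order number and I will check its status."),
    "lights_on": ({"lights", "lamp", "turn"},
                  "Turning on the living room lights."),
}
FALLBACK = "Sorry, I did not understand. Let me connect you with a human agent."


def reply(message: str) -> str:
    """Pick the intent whose keywords overlap most with the words in the message."""
    words = set(re.findall(r"[a-z']+", message.lower()))
    best_intent, best_score = None, 0
    for name, (keywords, _) in INTENTS.items():
        score = len(words & keywords)
        if score > best_score:
            best_intent, best_score = name, score
    # No keyword hit at all: escalate instead of guessing.
    return INTENTS[best_intent][1] if best_intent else FALLBACK


print(reply("What are your hours today?"))
print(reply("Has my order shipped yet?"))
print(reply("Tell me a joke"))
```

The brittleness is the point: rephrase a question without any listed keyword and the bot gives up, which is exactly the gap that NLP-driven assistants are closing.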

IBM is turning its cognitive computing platform Watson into a new voice assistant for enterprises… “The Watson Assistant combines two Watson services — Watson Conversation and Watson Virtual Agent — to offer businesses a more advanced conversational experience that can be pre-trained on a range of intents… IBM also stresses that enterprises retain ownership of their data. This is different from consumer facing assistants, alluding to but not specifically calling out Amazon and its Alexa assistant. The world is ready for the next step in conversational interfaces.” – ZDNet, Mar. 2018

An IBM computer debates humans, and wins, in a new, nuanced competition… “AI isn’t just winning at board games. Now it is learning the art of persuasion… IBM created a system called Project Debater that competes in what the company calls computational argumentation — knowing a subject, presenting a position and defending it against opposition. At a press event, IBM pitted the system against two humans with a track record of winning debates. In one debate, Noa Ovadia overall nudged two people among a few dozen in a human audience toward her perspective that governments should not subsidize space exploration. But in the second, Project Debater soundly defeated Dan Zafrir, pulling nine audience members toward its stance that we should increase the use of telemedicine… Project Debater did demonstrate that artificial intelligence can handle some complexities of human interaction, not just the clear-cut rules and victories of a board game or game show.” – CNET, Jun. 2018

AlterEgo: Interfacing with devices through silent speech – MIT, Apr. 2018

Machine learning platforms automate and accelerate the delivery of predictive applications that can process big data. The basic principle of machine learning is to build algorithms that receive input data, use statistical analysis to predict an output, and update their predictions as new data becomes available.
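The "predict, then update as new data arrives" loop can be sketched with online linear regression trained by stochastic gradient descent. This is one simple instance of the principle, not what any particular platform does; the class name, learning rate, and the data stream following y = 2x + 1 are all assumptions for illustration.

```python
class OnlineLinearRegressor:
    """Predicts y = w*x + b and nudges w and b after every new observation (SGD)."""

    def __init__(self, learning_rate: float = 0.02):
        self.w = 0.0
        self.b = 0.0
        self.lr = learning_rate

    def predict(self, x: float) -> float:
        return self.w * x + self.b

    def update(self, x: float, y: float) -> None:
        # Gradient of the squared error 0.5 * (prediction - y)**2 with respect to w and b.
        error = self.predict(x) - y
        self.w -= self.lr * error * x
        self.b -= self.lr * error


model = OnlineLinearRegressor()
# Hypothetical data stream that happens to follow y = 2x + 1.
# The model never sees the rule, only one example at a time.
for _ in range(2000):
    for x in [0.0, 1.0, 2.0, 3.0, 4.0]:
        model.update(x, 2 * x + 1)

print(round(model.w, 3), round(model.b, 3))  # learned slope and intercept
```

After enough examples the learned weights settle at the underlying slope of 2 and intercept of 1. The same update-per-example design is what lets a model keep improving as fresh data streams in, without retraining from scratch.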

What are Data Science and Machine-Learning Platforms… “A cohesive software application that offers a mixture of basic building blocks essential both for creating many kinds of data science solution and incorporating such solutions into business processes, surrounding infrastructure and products. Machine learning is a popular subset of data science that warrants specific attention when evaluating these platforms.” – Gartner Peer Insights, Jun. 2018

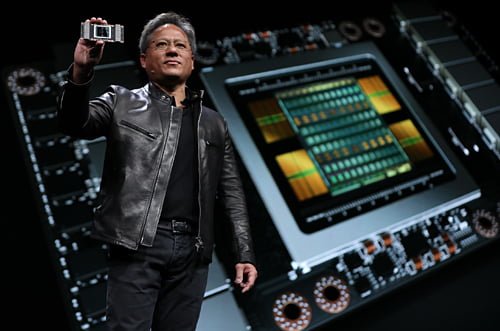

AI-optimized hardware includes graphics processing units (GPUs) designed to run AI-oriented computations. GPUs are already making a huge difference by bringing AI, including deep learning applications, to your desktop.

WELCOME TO THE ERA OF AI – NVIDIA TESLA V100… “Finding the insights hidden in oceans of data can transform entire industries, from personalized cancer therapy to helping virtual personal assistants converse naturally and predicting the next big hurricane. NVIDIA® Tesla® V100 is the most advanced data center GPU ever built to accelerate AI, HPC, and graphics. It is powered by NVIDIA Volta architecture, comes in 16 and 32GB configurations, and offers the performance of up to 100 CPUs in a single GPU. Data scientists, researchers, and engineers can now spend less time optimizing memory usage and more time designing the next AI breakthrough.” – Nvidia, May 2017

Forget algorithms. The future of AI is hardware… “Machine learning applications, particularly in pattern recognition require massive parallel processing. When Google announced that its algorithms were able to recognize images of cats, what they failed to mention was that its software needed 16,000 processors to run in order to do so. That is not too much of an issue when you can run your algorithms on a server farm in the cloud, but what if you had to run them on a tiny mobile device. This is increasingly becoming a major industry need. Running advanced machine learning capabilities at the end-point confers huge advantages to users, and solves for many data privacy issues as well. Imagine if Siri, for example, did not need to make cloud calls but was able to process all data and algorithms on the hardware of your smart phone.” – Huffington Post, Jan. 2018

Groundbreaking AI, at Your Desk… “Now you can get the computing capacity of 400 CPUs, in a workstation that conveniently fits under your desk, drawing less than 1/20th the power. NVIDIA® DGX Station™ delivers incredible deep learning and analytics performance designed for the office and whisper quiet with only 1/10th the noise of other workstations. Data scientists and AI researchers can instantly boost their productivity with a workstation that includes access to optimized deep learning software and runs popular analytics software.” – Nvidia

NVIDIA Boosts World’s Leading Deep Learning Computing Platform, Bringing 10x Performance Gain in Six Months… “Key advancements to the NVIDIA platform — which has been adopted by every major cloud-services provider and server maker — include a 2x memory boost to NVIDIA® Tesla® V100, the most powerful datacenter GPU, and a revolutionary new GPU interconnect fabric called NVIDIA NVSwitch™, which enables up to 16 Tesla V100 GPUs to simultaneously communicate at a record speed of 2.4 terabytes per second. NVIDIA also introduced an updated, fully optimized software stack. Additionally, NVIDIA launched a major breakthrough in deep learning computing with NVIDIA DGX-2™, the first single server capable of delivering two petaflops of computational power. DGX-2 has the deep learning processing power of 300 servers occupying 15 racks of datacenter space, while being 60x smaller and 18x more power efficient.” – EconoTimes, Mar. 2018

The world’s fastest supercomputer is back in America… “With a peak performance of 200 petaflops, or 200,000 trillion calculations per second, Summit more than doubles the top speeds of TaihuLight, which can reach 93 petaflops. Summit is also capable of over 3 billion billion mixed precision calculations per second, or 3.3 exaops, and more than 10 petabytes of memory, which has allowed researchers to run the world’s first exascale scientific calculation. The $200 million supercomputer is an IBM AC922 system utilizing 4,608 compute servers containing two 22-core IBM Power9 processors and six Nvidia Tesla V100 graphics processing unit accelerators each.” – The Verge, Jun. 2018
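The Summit figures mix several units, so here is a quick arithmetic check. It uses only the standard SI prefixes (peta = 10^15, exa = 10^18), the short-scale trillion and billion, and the numbers in the quote above; nothing here is new data.

```python
# Standard SI prefixes and short-scale number names.
PETA = 10**15
EXA = 10**18
TRILLION = 10**12
BILLION = 10**9

summit_peak = 200 * PETA          # 200 petaflops
taihulight_peak = 93 * PETA       # 93 petaflops
mixed_precision = 33 * EXA // 10  # 3.3 exaops, kept as an exact integer

print(summit_peak // TRILLION)                  # peak speed in trillions of calculations per second
print(round(summit_peak / taihulight_peak, 2))  # the ratio behind "more than doubles"
print(mixed_precision / (BILLION * BILLION))    # mixed-precision speed in "billion billions"

servers = 4608
print(servers * 2, servers * 6)  # total Power9 CPUs and Tesla V100 GPUs across all servers
```

This prints 200000, 2.15, 3.3, and 9216 27648: 200 petaflops really is 200,000 trillion calculations per second, 3.3 exaops is 3.3 billion billion operations per second, and the machine holds 27,648 V100 GPUs in total.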

Deep learning platforms combine software algorithms with neural networks built from multiple layers. The platforms are useful across nearly every area of AI. Common applications are pattern recognition and classification tasks that require large data sets.
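Why do multiple layers matter? A single layer of weighted sums cannot compute XOR (output 1 only when exactly one input is 1), but a network with one hidden layer of ReLU units can. This minimal sketch uses hand-picked weights rather than learned ones; a real deep learning platform would learn millions of such weights from large data sets.

```python
def relu(z: float) -> float:
    """Rectified linear unit: pass positive values through, clamp negatives to zero."""
    return max(0.0, z)


def xor_network(x1: float, x2: float) -> float:
    """Two-layer network with hand-set (not trained) weights that computes XOR on 0/1 inputs."""
    # Hidden layer: two ReLU units that both look at the sum of the inputs.
    h1 = relu(1.0 * x1 + 1.0 * x2 + 0.0)
    h2 = relu(1.0 * x1 + 1.0 * x2 - 1.0)
    # Output layer: a weighted sum of the hidden units.
    return 1.0 * h1 - 2.0 * h2


for a in (0, 1):
    for b in (0, 1):
        print(a, b, xor_network(a, b))
```

The hidden layer re-describes the inputs ("at least one is on", "both are on") so that the output layer can separate cases no single straight line could. Stacking many such layers is what lets deep networks recognize far richer patterns.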

The Neuromorphic Memory Landscape for Deep Learning… “There are several competing processor efforts targeting deep learning training and inference but even for these specialized devices, the old performance ghosts found in other areas haunt machine learning as well. Some believe that the way around the specter of Moore’s Law as well as Dennard scaling and data movement limitations is to start thinking outside of standard chip design and look to the human brain for inspiration. This idea has been tested out with various brain-inspired computing devices, including IBM’s TrueNorth chips among others over the years. However, deep learning presents new challenges architecturally and in terms of software development for such devices.” – The Next Platform, Jun. 2018

Is There a Smarter Path to Artificial Intelligence? Some Experts Hope So… “Deep learning algorithms train on a batch of related data — like pictures of human faces — and are then fed more and more data, which steadily improve the software’s pattern-matching accuracy. Although the technique has spawned successes, the results are largely confined to fields where those huge data sets are available and the tasks are well defined, like labeling images or translating speech to text… The technology struggles in the more open terrains of intelligence — that is, meaning, reasoning and common-sense knowledge. While deep learning software can instantly identify millions of words, it has no understanding of a concept like ‘justice,’ ‘democracy’ or ‘meddling.’ Researchers have shown that deep learning can be easily fooled. Scramble a relative handful of pixels, and the technology can mistake a turtle for a rifle or a parking sign for a refrigerator.” – NYTimes, Jun. 2018

Decision management revolves around information systems that draw on data generated within a company’s own operations. Combining AI with existing decision management systems can take the decision-making process to new levels. For example, customer data can be translated into predictive models of key trends, which help marketing and consumer departments target their advertising.

How AI and machine learning can improve business decision-making… “One key area where AI and machine learning can create value in companies today is the acceleration of the decision-making process. A greater volume and variety of data, more affordable data storage solutions and greater computational processing power are giving machine learning technologies the ability to analyze vast data sets in a way that deliver more accurate results, more swiftly. Due to the size and complexity of these data sets, machine learning can help unlock value from all this data in a way that humans cannot. As a result, machine learning is now able to guide better business decisions and more intelligent courses of action with minimal human intervention.” – K2, Oct. 2017

ARTIFICIAL INTELLIGENCE AND TEAM SCIENCE… “Predictive data analytics as a service (DAaaS) augmented by interdisciplinary panels of seasoned professionals to help our clients navigate challenging global markets.” – Meraglim

Robotic process automation (RPA) is used when it would be too expensive or inefficient for humans to execute a task or process. RPA software duplicates the actions a human would take. By taking on repeatable tasks, these software robots can vastly reduce costs, improve process quality and consistency, and increase scalability. This is not about physical robots, but rather software that resides on a PC and interacts with business applications.
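To illustrate the kind of repetitive clerical rule a software robot takes over, here is a minimal sketch that matches incoming payments to open invoices and routes exceptions to a human. Real RPA tools typically drive the user interface of existing business applications; this sketch works on plain in-memory records instead, and every class, field, and record in it is hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Invoice:
    invoice_id: str
    customer: str
    amount: float
    paid: bool = False


@dataclass
class Payment:
    reference: str  # invoice number as typed by the customer
    amount: float


def reconcile(invoices: list[Invoice], payments: list[Payment]) -> list[str]:
    """Mark invoices paid when reference and amount match; return exceptions for human review."""
    by_id = {inv.invoice_id: inv for inv in invoices}
    exceptions = []
    for pay in payments:
        # Normalize sloppy input the way a clerk would (stray spaces, lowercase).
        inv = by_id.get(pay.reference.strip().upper())
        if inv is None:
            exceptions.append(f"Unknown reference {pay.reference!r}")
        elif inv.paid:
            exceptions.append(f"Duplicate payment for {inv.invoice_id}")
        elif abs(inv.amount - pay.amount) > 0.005:
            exceptions.append(f"Amount mismatch on {inv.invoice_id}: "
                              f"expected {inv.amount:.2f}, got {pay.amount:.2f}")
        else:
            inv.paid = True
    return exceptions


invoices = [Invoice("INV-1", "Acme", 100.0), Invoice("INV-2", "Globex", 250.0)]
payments = [Payment("inv-1 ", 100.0), Payment("INV-2", 200.0),
            Payment("INV-9", 50.0), Payment("INV-1", 100.0)]
for issue in reconcile(invoices, payments):
    print(issue)
```

The bot clears the routine match on its own and hands only the three oddities to a person, which is where the cost and consistency gains described above come from.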

Robotic process automation: The new IT job killer?… “A quiet revolution with a potential impact on the IT workforce reminiscent of outsourcing may be under way in the form of robotic process automation. Geared toward automating a variety of business and computing processes typically handled by humans, RPA will stir passions at organizations that deploy the technology, with its potential to slash jobs, shake up the relevant skills mix, and if implemented strategically, stave off the specter of outsourcing.” – InfoWorld, Mar. 2015

Robots could replace as many as 10,000 jobs at Citi… “In an interview with the Financial Times, Jamie Forese, the president of Citi and chief executive of the bank’s institutional clients group said operational positions in particular, which account for more than 40% of the bank’s total employees, are the most fertile for machine processing.” – FOX Business, Jun. 2018

- Computer engineers now make up a quarter of Goldman Sachs’ workforce – CNBC, Apr. 2018

- Commerzbank Replacing Human Research Analysts With Artificial Intelligence – ZeroHedge, Jun. 2018

- The Rise Of The Robo-Advisor: How Fintech Is Disrupting Retirement – ValueWalk, Jun. 2018

- The Digital Transformation Of Accounting And Finance – AI, Robots And Chatbots – Forbes, Jun. 2018

Text analytics and NLP are a powerful combination. Text analytics is a technique in which words are counted, grouped, and summarized into categorical statistics. Two common uses are sorting documents and running the classification algorithms that keep spam out of your email. NLP techniques apply knowledge about the structure of language to extract the names of entities, such as companies, products, and locations, as well as the relationships between entities and their characteristics. Used individually or combined, these techniques let high-frequency trading (HFT) algorithms extract words from mainstream news headlines and immediately execute stock market trades. Now you know why a stock-specific or industry-related event can aggressively alter a price trend within a fraction of a second of the news.

One area of text analytics that is sometimes overlooked is sentiment analysis. The goal is to categorize the attitude expressed in a spoken comment or in a written headline or article. Basic sentiment can be broken down into positive, neutral, and negative. One drawback of basic analytics is that when slang or sarcasm appears, algorithms can overlook or completely misinterpret the context of a statement. Working through that challenge is extremely important for trading-based algorithms.
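A minimal sketch of basic, count-based sentiment analysis shows both the technique and its sarcasm blind spot. The tiny word lists are hypothetical; production systems use large scored lexicons or trained models, and no trading firm's actual method is implied.

```python
import re

# Hypothetical mini-lexicons; real systems use thousands of scored terms or a trained model.
POSITIVE = {"beats", "surges", "record", "strong", "upgrade", "great"}
NEGATIVE = {"misses", "plunges", "weak", "downgrade", "lawsuit", "recall"}


def sentiment(text: str) -> str:
    """Count positive and negative words and label the text positive, neutral, or negative."""
    words = re.findall(r"[a-z]+", text.lower())
    score = sum(w in POSITIVE for w in words) - sum(w in NEGATIVE for w in words)
    if score > 0:
        return "positive"
    if score < 0:
        return "negative"
    return "neutral"


print(sentiment("Acme beats estimates, shares surge on record sales"))
print(sentiment("Acme announces quarterly dividend"))
print(sentiment("Acme plunges after analyst downgrade"))
# Sarcasm defeats word counting: a human reads this headline as bad news.
print(sentiment("Oh great, a record number of recalls this quarter."))
```

The first three headlines come out positive, neutral, and negative as expected, but the sarcastic one is also scored positive because "great" and "record" outweigh everything else. An algorithm trading on that label would buy into bad news.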

Machine Learning Text Analysis Unlocks the Power of Natural Language Processing… “We are now however on the cusp of a new era in computing, one where we interact with machines as if we were talking to another human, using text or voice. This evolution will see technology, like machine learning text analysis, facilitate natural language processing (NLP) to allow us to talk to the machines just like we would talk to one another.” – Samsung Insights, Sep. 2017

Biometric solutions use physical and behavioral traits for identification and recognition. These traits include, but are not limited to, touch, visual, speech, and body-language characteristics, and they are typically applied to security or access control across businesses and governments. The U.S. Government is aggressively funding advanced research in this area.

“Biometric systems allow for personal identification based upon fundamental biometric features that are unique and time invariant, such as features derived from fingerprints, faces, irises, retinas, and voices. Biometric systems are composed of complex hardware and software designed to measure a signature of the human body, compare the signature to a database, and make a decision based on this matching process.” – West Virginia University Undergraduate Program
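The "measure, compare to a database, decide" loop described above can be sketched as comparing a sample feature vector against enrolled templates and accepting the best match only if it clears a similarity threshold. This is a minimal sketch under stated assumptions: cosine similarity is one common choice, assumed here, and the vectors, names, and 0.95 threshold are hypothetical. Real systems extract templates with specialized algorithms and tune the threshold to balance false accepts against false rejects.

```python
import math
from typing import Optional


def cosine_similarity(a: list[float], b: list[float]) -> float:
    """Similarity of two feature vectors: 1.0 means they point in the same direction."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm


# Hypothetical enrolled templates (in practice derived from fingerprints, faces, irises, or voices).
ENROLLED = {
    "alice": [0.9, 0.1, 0.4, 0.7],
    "bob": [0.2, 0.8, 0.6, 0.1],
}


def identify(sample: list[float], threshold: float = 0.95) -> Optional[str]:
    """Return the best-matching enrolled identity, or None if no match clears the threshold."""
    best_name, best_score = None, -1.0
    for name, template in ENROLLED.items():
        score = cosine_similarity(sample, template)
        if score > best_score:
            best_name, best_score = name, score
    return best_name if best_score >= threshold else None


print(identify([0.88, 0.12, 0.42, 0.69]))  # a fresh reading close to Alice's template
print(identify([0.5, 0.5, 0.5, 0.5]))      # an unenrolled person
```

The first sample is accepted as "alice", while the second resembles no one closely enough and is rejected with None. Raising the threshold tightens security at the cost of turning away more legitimate users.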

Biometrics: Are they becoming the nirvana of personal security? – Biometric Update, Jun. 2018

Where does quantum computing fit into all of the above? The following article and interview will help put it all together.

- If you think AI is terrifying wait until it has a quantum computer brain – TNW, Dec. 2017

- Quantum Computing Expert Explains One Concept in 5 Levels of Difficulty – WIRED, Jun. 2018

Today’s headline image was created by Peter Harrison.

Plan Your Trade, Trade Your Plan

TraderStef on Twitter

Website: TraderStef.com